Disallowed JavaScript file

This means that the URL in question is a JavaScript URL that is blocked by a robots.txt disallow rule.

Why is this important?

Googlebot is progressively moving towards crawling and rendering content as standard. This means that it builds up the page and renders it as a user would see it in the browser, and in order to do this, it needs access to all the resource files that are used to build the page.

If these page resource URLs are disallowed in robots.txt, Googlebot may be unable to render the page content correctly. Google relies on rendering in a number of its algorithms, most notably the 'mobile friendly' one, so if content cannot be properly rendered, this could have a knock-on effect on search engine rankings.

What does the Hint check?

This Hint will trigger for any internal URL which has 'javascript' for the content type, where the URL or path is blocked by a disallow rule in robots.txt.
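The robots.txt part of this check can be approximated outside Sitebulb with Python's standard `urllib.robotparser` module. This is only a minimal sketch, not Sitebulb's implementation: the helper name `is_blocked`, the sample rules and the `Googlebot` user agent are assumptions made for illustration, and the content type test is left out.

```python
from urllib.robotparser import RobotFileParser

# Sample robots.txt content (assumed for illustration).
ROBOTS_TXT = """\
User-agent: *
Disallow: /assets/
"""


def is_blocked(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text disallows `url` for `user_agent`."""
    parser = RobotFileParser()
    # parse() accepts an iterable of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


print(is_blocked(ROBOTS_TXT, "https://example.com/assets/script1.js"))  # True
print(is_blocked(ROBOTS_TXT, "https://example.com/index.html"))         # False
```

Note that `urllib.robotparser` uses simple prefix matching and does not interpret the `*` and `$` wildcards that Google supports, so results may differ for rules that rely on them.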

Examples that trigger this Hint

Consider the URL: https://example.com/assets/script1.js

The Hint would trigger for this URL if the website's robots.txt file included a disallow rule that stopped search engines crawling the URL:

User-agent: *

Disallow: /assets/

How do you resolve this issue?

This Hint is marked 'Critical' as it represents a fundamentally breaking issue, which may have a serious adverse impact upon organic search traffic. It is strongly recommended that Critical issues are dealt with as a matter of high priority.

The solution for this issue is to isolate the robots.txt rule(s) that disallow the JavaScript URL, and delete those lines from robots.txt.

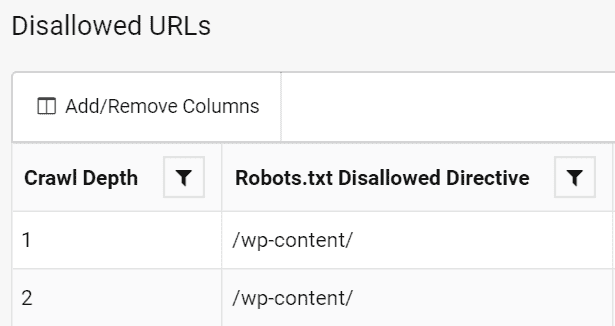

Clicking through from the Hint to the URL List will show you the robots.txt disallow rule that was triggered for the URL.
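If you want to isolate the offending rule(s) by hand, for example to double-check an edited robots.txt before deploying it, something like the following sketch may help. It lists every `Disallow` line whose pattern matches the URL's path. The function names are hypothetical, the matching is a simplified reading of Google's `*` and `$` wildcards, and user-agent grouping and `Allow` rules are deliberately ignored, so treat the output as a list of candidates rather than a definitive verdict.

```python
import re
from urllib.parse import urlsplit


def rule_to_regex(pattern: str) -> re.Pattern:
    """Convert a robots.txt path pattern into a regex (simplified: '*' and trailing '$')."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape the literal parts and let '*' match any run of characters.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile("^" + body + ("$" if anchored else ""))


def matching_disallow_rules(robots_txt: str, url: str) -> list[tuple[int, str]]:
    """Return (line number, rule text) for each Disallow line that matches the URL."""
    parts = urlsplit(url)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    hits = []
    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop trailing comments
        field, _, value = line.partition(":")
        if field.strip().lower() != "disallow":
            continue
        value = value.strip()
        # An empty Disallow value blocks nothing, so it is skipped.
        if value and rule_to_regex(value).match(path):
            hits.append((number, raw.strip()))
    return hits


robots_txt = "User-agent: *\nDisallow: /assets/\n"
print(matching_disallow_rules(robots_txt, "https://example.com/assets/script1.js"))
# [(2, 'Disallow: /assets/')]
```

Once the matching lines are removed, running the same function again should return an empty list for the JavaScript URL.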
