Key Considerations in Enterprise SEO Auditing for More Traffic, Less Road Rage!

Published May 6, 2025

Massive thanks to Konrad Szymaniak who joins us today to discuss the complexities and nuances of enterprise SEO auditing.

Enterprise SEO is vastly different from small business SEO. The scale, complexity, and impact of SEO decisions can make or break organic growth. This article investigates SEO audit approaches and best practices for enterprises, revealing key findings and strategies to improve search performance for large-scale websites.

This article is tailored for SEO professionals, digital marketing managers, and C-suite executives looking to improve their enterprise website’s organic performance.

Contents:

- Why enterprise SEO audits are crucial

- Defining enterprise SEO audit scope

- Role of SEO tools in enterprise audits

- Importance of communication in enterprise SEO auditing

- Concluding views

Why Enterprise SEO Audits Are Crucial

Large sites have millions of pages, making technical SEO a priority

One of the biggest challenges enterprise websites face is ensuring that search engines can efficiently crawl and index the right content. Without proper oversight, valuable pages may go undiscovered, while unimportant URLs consume crawl budget.

The foundational concept of crawling, indexing, and ranking can help enterprise-level stakeholders understand how SEO works. As SEOs, we need to segment these concepts into different categories depending on our initial objectives, particularly when diagnosing indexing issues at scale.

Establishing the correct goals, objectives, and performance assumptions for your enterprise-level audit is critical.

Are we seeing a drop in traffic for a particular product category (eCommerce SEO) because search volume has declined, because we’ve lost rankings, or because the crawler is spending too much time on low-value URLs rather than prioritising important content updates?

Clustering pages by category, both by product type and crawl importance, is essential for efficient site management and troubleshooting. By grouping related pages, it becomes easier to identify indexing issues, monitor performance trends, and optimise internal linking structures.

On large websites, I prioritise crawl importance before product categorisation, ensuring that search engines allocate crawl budget to the most valuable and frequently updated pages first.

This reverse-order approach prevents wasted resources on low-priority or duplicate content, ultimately enhancing discoverability and overall SEO performance.
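As a rough illustration of grouping by crawl importance first and category second, here is a minimal Python sketch. The path prefixes, tier names, and the assumption that the second path segment names the product type are all hypothetical; a real site would need its own rules.

```python
from collections import defaultdict
from urllib.parse import urlsplit

# Hypothetical rules: path prefixes mapped to a crawl-importance tier.
# Order matters: the first matching prefix wins.
CRAWL_TIERS = [
    ("/search", "low"),
    ("/filter", "low"),
    ("/products/", "high"),
    ("/category/", "high"),
    ("/blog/", "medium"),
]


def crawl_tier(url: str) -> str:
    """Return the crawl-importance tier for a URL, defaulting to 'medium'."""
    parts = urlsplit(url)
    # Assumption for this sketch: parameterised URLs are treated as low value.
    if parts.query:
        return "low"
    for prefix, tier in CRAWL_TIERS:
        if parts.path.startswith(prefix):
            return tier
    return "medium"


def category(url: str) -> str:
    """Use the second path segment (or the first, if alone) as a rough category label."""
    segments = [s for s in urlsplit(url).path.split("/") if s]
    if len(segments) >= 2:
        return segments[1]
    return segments[0] if segments else "home"


def cluster(urls):
    """Group URLs by crawl tier first, then by category."""
    groups = defaultdict(lambda: defaultdict(list))
    for url in urls:
        groups[crawl_tier(url)][category(url)].append(url)
    return groups
```

Reading `cluster(urls)["high"]` first keeps attention on the pages that matter most before drilling into individual categories.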

Identifying and Addressing Crawl Inefficiencies

A key part of an enterprise SEO audit is identifying whether Googlebot is crawling the right pages at the right frequency. If important pages aren’t getting crawled or indexed, it doesn’t matter how well-optimised the content is.

That’s why understanding crawl behaviour through log file analysis and server-side data is crucial.
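To make log file analysis concrete, the sketch below counts Googlebot requests per top-level site section. It assumes the server writes the common "combined" access log format and matches on the user-agent string only. User agents can be spoofed, so a production version would also verify the requesting IP, which is out of scope here.

```python
import re
from collections import Counter

# Matches the request path, status code and user agent in a combined-format log line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) HTTP/[\d.]+" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits_by_section(lines):
    """Count requests whose user agent mentions Googlebot, keyed by top-level section."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue
        # Drop the query string, then keep only the first path segment.
        path = match.group("path").split("?", 1)[0]
        section = "/" + path.strip("/").split("/", 1)[0]
        hits[section] += 1
    return hits
```

Comparing these counts with the sections you consider business-critical shows quickly whether crawl activity is going where you want it.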

Enterprise-level SEO requires stakeholder alignment and business-driven prioritisation

For example, a Marketing Director once reported a traffic drop on key landing pages. The real issue wasn’t a decline in search demand but an inefficient crawl pattern. Before they sent me that report, my independent team and I had already been working with their content team to enhance topic authority for a specific product category cluster.

As a result, newer, more relevant URLs had gained rankings, leading to a shift in traffic for these specific URLs. The director initially misinterpreted this as a loss rather than a redistribution of search visibility.

Crawl Budget Optimisation and Indexability

So, you might be wondering why technical SEO is important here. Well, the initial reports the Marketing Director sent focused on traffic losses, but the root cause was actually crawl budget inefficiencies.

If we had simply acted on those reports without analysing log files and indexing patterns, we might have conducted another generic crawl audit without making meaningful improvements.

Instead, we updated our robots.txt file and internal links to ensure that crawlers prioritised the right URLs.

Crawl budget optimisation is about directing crawlers towards high-value, revenue-driving content.

That’s why enterprise SEO audits must be grounded in technical analysis rather than surface-level traffic trends.

Defining Enterprise SEO Audit Scope

A successful enterprise SEO audit must be structured around a clear set of goals

During my career, I have had to make difficult assumptions based on the objectives the Digital Marketing department was trying to achieve. What some SEOs consider important, others might not. The key is not to rely solely on what tools tell you but to apply common sense.

For instance, if the goal is to assess whether Googlebot can crawl the site, we would examine robots.txt, sitemaps, and links to understand why some pages may or may not be crawled.
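A quick way to sanity-check the robots.txt part of that examination is Python's standard-library `urllib.robotparser`. The sketch below lists which URLs a given set of rules would block. The example rules and URLs are hypothetical, and the standard-library parser does not replicate every detail of Google's matching (wildcards, for instance), so treat the result as a first pass rather than a verdict.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls, user_agent: str = "Googlebot"):
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    # parse() accepts the file content as a list of lines, so no network call is made.
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    rules = "User-agent: *\nDisallow: /search\n"
    candidates = [
        "https://example.com/products/red-trainer",
        "https://example.com/search?q=red",
    ]
    print(blocked_urls(rules, candidates))
```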

Editor’s Note: You might also find this article useful → Improving Crawling & Indexing with Noindex, Robots.txt & Rel Attributes

Weekly Crawls

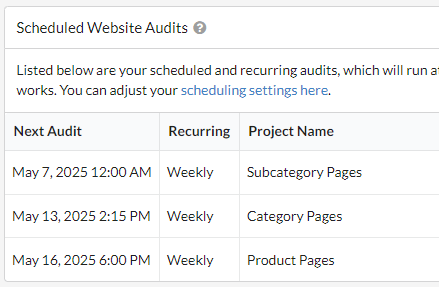

Running weekly crawls is essential. I love Sitebulb Cloud for scheduling and automating when and how specific sections of the site are crawled.

For example, before product results and the Google Shopping tab became a key focus for eCommerce SEOs (sometime in 2023), I concentrated on category URLs to fix faulty response codes at scale—primarily ensuring that out-of-stock products returned the correct status code and remained topically relevant.

However, priorities have shifted today. Product results are now a critical aspect of my weekly crawl assessments, and ensuring that Googlebot can access every product for sale is a top priority.

Key Areas of an Enterprise SEO Audit

I analyse each of these factors using advanced SEO tools and techniques to ensure a holistic approach:

Indexability & Crawlability: Ensuring Google can efficiently find and index the most important pages

An Enterprise SEO Audit should always have a clear objective or a specific problem we are trying to fix.

For example, let’s dive deeper into the indexation status of key URLs that were performing extremely well - until something interesting happened. Every Monday, we ran a weekly auto-scheduled crawl to check if any important URLs were broken or misplaced in the mega menu.

Then, one of our consultants pointed out that the trade team had misplaced a “/” in the custom CMS framework for a group of high-trending URLs. We had to act quickly. We crawled a specific section of the site, running a segmented audit focused on the business-critical issue.

We knew we had to redirect those broken URLs. Fortunately, our sitemap updates automatically if a URL is broken or if no products are left (thanks to the Dev Team).

However, the internal links pointing to these broken URLs also had to be adjusted. Identifying these pages was straightforward using Sitebulb.
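Outside a crawler's interface, the same lookup can be sketched from a crawl export. The example below assumes two hypothetical inputs: a list of (source, target) internal link pairs and a dictionary of status codes per URL. It is an illustration of the idea, not a description of how Sitebulb works internally.

```python
def inlinks_to_broken(links, status_by_url):
    """Map each broken target URL to the sorted source pages that still link to it.

    links: iterable of (source_url, target_url) pairs from a crawl export.
    status_by_url: dict of URL -> HTTP status code from the same crawl.
    """
    broken = {}
    for source, target in links:
        # Assumption: targets missing from the status map are treated as healthy.
        if status_by_url.get(target, 200) >= 400:
            broken.setdefault(target, set()).add(source)
    return {target: sorted(sources) for target, sources in broken.items()}


if __name__ == "__main__":
    crawl_links = [
        ("/menu", "/trainers//red"),
        ("/blog/guide", "/trainers//red"),
        ("/menu", "/trainers/blue"),
    ]
    crawl_status = {"/trainers//red": 404, "/trainers/blue": 200}
    for target, sources in inlinks_to_broken(crawl_links, crawl_status).items():
        print(target, "is linked from", sources)
```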

After three hours of working to protect internal linkage and authority, we ran another crawl to double-check that everything was correctly linking to the new pages.

Our custom log file analysis confirmed that Googlebot had started crawling the new pages, so we monitored for any further issues.

Within 48 hours of completing the audit, we checked Google Search Console (GSC) to assess changes in impressions and clicks on these newly updated pages.

The results?

We saw a 17% increase (Y-on-Y) in clicks for our target URLs. This also played a great part in our “positive” monthly Visibility Index reporting.

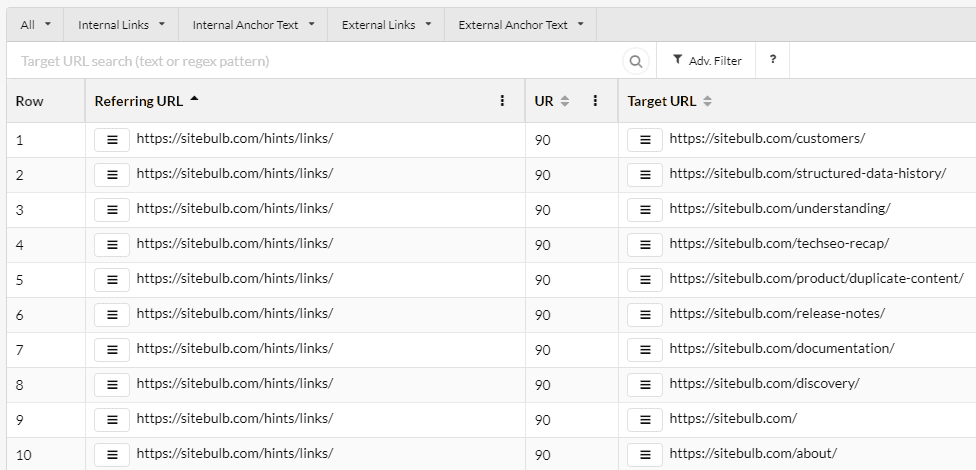

Internal Linking & Page Discoverability: Enhancing website architecture for better rankings

With enterprise websites, if you do not act quickly, new URLs will lose out - making it harder to regain previous rankings.

To ensure full recovery from this mini audit, we also increased the number of internal links by identifying relevant n-grams in our category-specific blog content.
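One way to picture the n-gram step is the sketch below. It scans blog text for word n-grams that match a known anchor phrase and reports where a link could be added. The phrase-to-URL mapping and the function names are hypothetical, and the exact-match approach is a simplification of whatever matching a production workflow would use.

```python
import re


def ngrams(text: str, n: int):
    """Yield lowercase word n-grams from text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    for i in range(len(words) - n + 1):
        yield " ".join(words[i:i + n])


def link_opportunities(blog_posts, anchor_targets, max_n: int = 3):
    """Find blog posts that mention a phrase matching a known category anchor.

    blog_posts: dict of blog URL -> body text.
    anchor_targets: dict of lowercase phrase -> category URL (hypothetical mapping).
    Returns a list of (blog_url, phrase, category_url) tuples.
    """
    found = []
    for url, text in blog_posts.items():
        seen = set()  # report each phrase once per post
        for n in range(1, max_n + 1):
            for gram in ngrams(text, n):
                if gram in anchor_targets and gram not in seen:
                    seen.add(gram)
                    found.append((url, gram, anchor_targets[gram]))
    return found
```

Each suggestion still needs a human check against the question of whether the user would find the anchor text useful.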

On larger sites, every URL fits within a broader hierarchy. To maintain alignment, we always use a decision-tree system based on three key questions:

- Does the URL belong to the correct sub-category?

- Does any other URL receive links from this sub-category?

- Would the user find this anchor text useful?

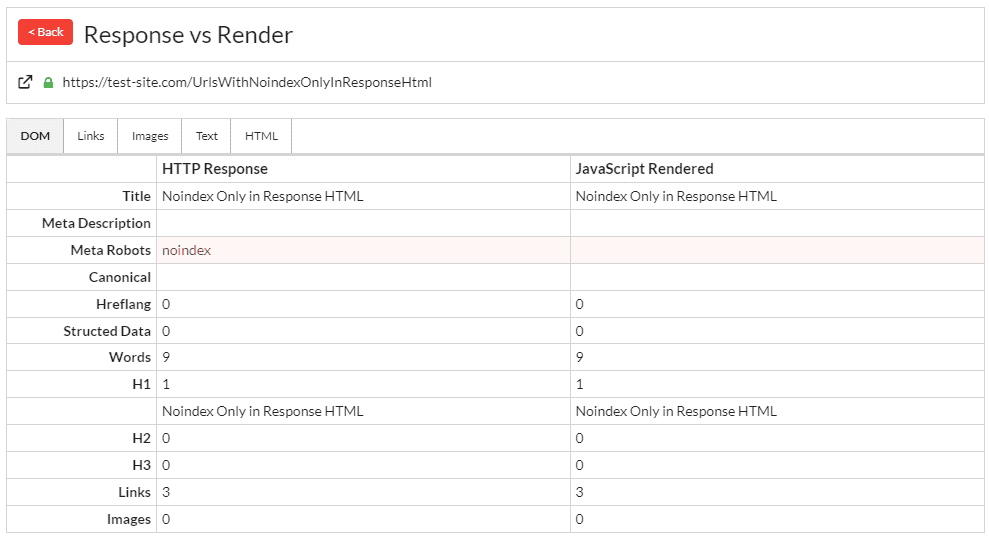

Handling Custom Frameworks & JavaScript SEO

Websites built on custom frameworks are typically JavaScript-based. It is crucial for enterprise SEOs to ensure that robots directives match both the HTML response and JavaScript rendering.

I use Chrome Inspector and the URL Inspection Tool in Google Search Console to verify this, although you can also use Sitebulb’s response vs render report. I then cross-reference these checks against my weekly crawl to confirm the results match, which gives me the evidence to justify why a fix must be implemented.
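The comparison itself is simple once you have both versions of the page. The sketch below extracts meta robots directives from two HTML strings and reports any that appear in only one. It assumes you have already saved the raw response HTML and the rendered HTML (for example, from a headless browser); fetching and rendering are not shown, and X-Robots-Tag headers are ignored.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.directives.update(
                d.strip().lower() for d in content.split(",") if d.strip()
            )


def robots_directives(html: str) -> set:
    """Return the set of meta robots directives found in an HTML string."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


def directive_mismatch(response_html: str, rendered_html: str) -> dict:
    """Return directives present in only one of the two HTML versions."""
    raw = robots_directives(response_html)
    rendered = robots_directives(rendered_html)
    return {"only_in_response": raw - rendered, "only_in_rendered": rendered - raw}
```

Two empty sets mean the directives agree; anything else is worth raising with the development team.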

Editor’s Note: You can find out more about JavaScript SEO here and make sure you check out Google’s guide on JavaScript.

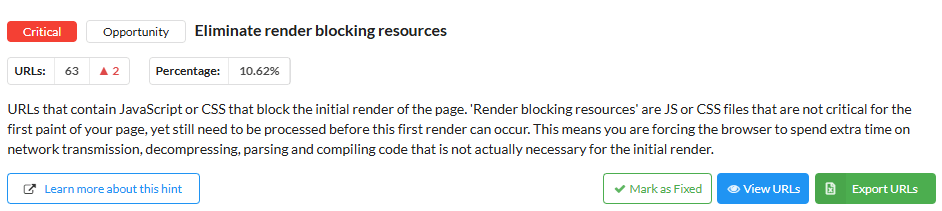

We also examine page speed elements, such as ranking URLs that load render-blocking resources (JavaScript or CSS that blocks initial rendering), which could impact indexation in the long run.
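For a rough first pass at spotting render-blocking resources, the sketch below scans the `<head>` of an HTML string for external scripts without `async` or `defer` and for stylesheets that are not print-only. These are simplified heuristics and an assumption on our part; dedicated performance tooling applies more rules than this.

```python
from html.parser import HTMLParser


class RenderBlockingFinder(HTMLParser):
    """Collect scripts and stylesheets in <head> that are likely to block rendering."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.blocking = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "head":
            self.in_head = True
        elif not self.in_head:
            return
        elif tag == "script" and "src" in attr:
            # External scripts block parsing unless async, defer or type=module is set.
            if "async" not in attr and "defer" not in attr and attr.get("type") != "module":
                self.blocking.append(attr["src"])
        elif tag == "link" and "stylesheet" in (attr.get("rel") or "").lower().split():
            # Print-only stylesheets are skipped in this sketch.
            if (attr.get("media") or "all").lower() != "print":
                self.blocking.append(attr.get("href"))

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def find_render_blocking(html: str) -> list:
    """Return the URLs of likely render-blocking resources, in document order."""
    finder = RenderBlockingFinder()
    finder.feed(html)
    return finder.blocking
```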

Mini-Audits

I particularly enjoy auditing specific sections of websites. For ecommerce sites, brand reviews are a great focus area. I check whether pages have experienced traffic drops and cross-check for spikes in search volumes. If I find issues, I can pinpoint necessary changes by segmenting URLs by site section.

I am currently using several machine-learning algorithms to analyse topics and provide content writers with insights on new URLs that could be better optimised. I use a custom Python script for this. The core concept is based on BERTopic: I cluster text and keywords into semantic topics, URLs, and elements.
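The author's script is not shown, so the sketch below is only a stand-in to convey the idea of clustering text into topics. It is not BERTopic: instead of embeddings it uses plain token overlap (Jaccard similarity) and a greedy assignment, with a hypothetical stopword list and threshold. It runs on the standard library alone.

```python
import re

# Hypothetical, deliberately tiny stopword list for the example.
STOPWORDS = {"a", "an", "and", "for", "in", "of", "the", "to", "with"}


def tokens(text: str) -> set:
    """Lowercase word tokens with stopwords removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def jaccard(a: set, b: set) -> float:
    """Share of tokens the two sets have in common."""
    return len(a & b) / len(a | b) if a | b else 0.0


def greedy_clusters(texts, threshold: float = 0.3):
    """Assign each text to the first cluster whose seed text is similar enough."""
    clusters = []  # list of (seed_tokens, [member texts])
    for text in texts:
        toks = tokens(text)
        for seed, members in clusters:
            if jaccard(toks, seed) >= threshold:
                members.append(text)
                break
        else:
            # No existing cluster was close enough, so this text seeds a new one.
            clusters.append((toks, [text]))
    return [members for _, members in clusters]
```

A topic-modelling library would capture meaning rather than shared words, but the output has the same shape: groups of related text that a content writer can review together.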

The Role of SEO Tools in Enterprise Audits

Many players are involved in enterprise SEO auditing, so relying on a single tool is not advisable. Your objectives must guide your enterprise SEO auditing process.

Consider: am I auditing crawls, logs, indexing, or rankings? And for which section of the site?

For example, if traffic drops for a product category, I investigate:

- Search volume fluctuations

- Duplicate content (easily checked using Sitebulb)

- Internal and external links

The websites I work with generally have extensive backlink profiles, though not necessarily healthy ones.

Using Google Search Console (GSC) to audit backlinks for enterprises is one of my favourite tasks, as I can cross-check what Googlebot sees. I then compare it to my latest automated crawl to verify whether GSC is missing recent updates.

The Importance of Communication in Enterprise SEO Audits

Working at the enterprise level can seem daunting, especially when development tickets require extensive details and approval from multiple stakeholders before reaching the lead developer.

So one of my primary responsibilities is communication. That’s right—SEO is not just about technical fixes!

I cannot simply remove a product URL because it returns a 404 status code. I must first consult the trading team to determine whether similar products will be restocked. If not, I typically implement an automatic redirect to the most relevant category URL.

Navigation menus take priority in enterprise SEO because they contain globally recognised inlinks that significantly impact rankings. Some enterprise systems automatically remove broken URLs from sitemaps, as they should.

Enterprise SEO Audit Implementation: Managing Long Waiting Times

Not every audit recommendation is implemented immediately. This is why, at the end of every audit, I provide justifications for each recommendation and tailor my communication to the relevant teams.

For example, if an audit recommendation involves metadata or content, I send an easily digestible message to the copy team. This can include something like this:

Hi {content writer’s name}, {site name; site category; site URL} could benefit from an FAQ section below the product listings. Here’s the data; please feel free to adjust it to the brand guidelines and for the {product group} and {category segment}:

Q1; A1

Q2; A2

Q3; A3

Top Tip: Before my independent SEO team is contracted with a content team, we have a shared file stating the exact variables. This makes it easier for a content writer to just hover their mouse over {variable} to see the exact information.

Such a template speeds up the process of communication with dynamic content departments at an enterprise level.
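If the variables live in a shared file, filling the template can be automated. Below is a minimal sketch that replaces `{placeholder}` markers with values from a dictionary and leaves unknown placeholders visible so that gaps are easy to spot. The placeholder names and values in the usage example are hypothetical.

```python
import re


def fill_template(template: str, variables: dict) -> str:
    """Replace each {placeholder} with its value; leave unknown placeholders untouched."""

    def substitute(match):
        key = match.group(1).strip()
        # Fall back to the original "{...}" text when no value is defined.
        return str(variables.get(key, match.group(0)))

    return re.sub(r"\{([^{}]+)\}", substitute, template)


if __name__ == "__main__":
    message = fill_template(
        "Hi {writer name}, {site URL} could benefit from an FAQ section for {product group}.",
        {"writer name": "Sam", "site URL": "/category/trainers"},
    )
    print(message)
```

A regular expression is used instead of `str.format` because the placeholder names in the template contain spaces and punctuation.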

When an audit recommendation involves broken links, I create a detailed dev ticket for the custom CMS to outline the necessary fixes. The structure of this ticket is more formal and organised compared to a content team's request, as developers typically prefer a clear and structured approach.

This ensures that the necessary action steps are well understood and actionable.

1. Highlight the Issue

Provide a Status Code Report: Include a comprehensive status code report that lists all broken links (404 errors, 410 errors, or any other error codes). This report should clearly identify the source pages (inlinks) where these links are located.

Broken Link Details: For each broken link, provide the exact URL and the destination URL that it’s supposed to point to. If applicable, include the reason for the broken link (e.g., deleted page, incorrect URL, etc.).

Reference SEO Tools: Attach the results of an SEO crawl or link checker (e.g., Sitebulb, Screaming Frog) to provide supporting evidence of the broken links.

Required Fixes: Explicitly state the action that needs to be taken—whether it's replacing, redirecting, or removing the broken link.

Example:

Broken link: /old-page

Destination page: /new-page

Error code: 404

2. State the Impact

Impact Levels: Classify the impact of the broken links as low, moderate, or high:

Low Impact: Broken links on less trafficked pages or non-critical parts of the website. These may not immediately harm SEO but should still be fixed.

Moderate Impact: Links that affect moderately important pages, such as category or product pages, where the broken links could potentially lead to reduced crawlability or user experience.

High Impact: Broken links on critical pages like homepage, important landing pages, or key transactional pages, which can significantly harm SEO performance and user experience.

Reasoning for Impact: Include the rationale for why the broken links are problematic in terms of SEO. For example, broken links on high-traffic pages may result in lost page authority, reduced crawl efficiency, and a poor user experience.

Example:

The broken link on /old-page has a moderate impact because it’s within a product category that receives significant traffic, and users may leave the site due to frustration with a broken link.
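The first two parts of the ticket can be captured in a small data structure, which keeps entries consistent when a report contains hundreds of links. In the sketch below, the page-type labels and their mapping to impact levels are hypothetical and only loosely follow the low, moderate, and high definitions above.

```python
from dataclasses import dataclass

# Hypothetical mapping from the type of page hosting the broken link to an impact level.
IMPACT_BY_PAGE_TYPE = {
    "homepage": "high",
    "landing": "high",
    "checkout": "high",
    "category": "moderate",
    "product": "moderate",
}


@dataclass
class BrokenLinkEntry:
    source_page: str
    source_page_type: str
    broken_url: str
    destination_url: str
    status_code: int

    @property
    def impact(self) -> str:
        """Impact level for the ticket; unknown page types default to 'low'."""
        return IMPACT_BY_PAGE_TYPE.get(self.source_page_type, "low")

    def to_ticket_line(self) -> str:
        """Format the entry as a single line for the dev ticket."""
        return (
            f"Broken link: {self.broken_url} | "
            f"Destination page: {self.destination_url} | "
            f"Error code: {self.status_code} | "
            f"Found on: {self.source_page} | "
            f"Impact: {self.impact}"
        )
```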

3. Acceptance Criteria

Expected Fixes: Provide a clear example of the expected result once the links are corrected. This might include:

- A link correctly pointing to the intended destination.

- A valid 200 status code for all fixed links.

- Screenshots or examples of the updated internal link structure.

- The fixed link should be tested for functionality.

Verification: After the fixes are implemented, the SEO team will verify that the broken links have been successfully resolved by crawling the pages again. If needed, a follow-up crawl report should be provided.

Example Acceptance Criteria:

Link on /category-page is corrected to point to /new-product-page and returns a 200 status code.

All previously broken links in the report now show a valid status and are fixed.
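The verification step can be expressed as a check against the follow-up crawl. The sketch below assumes hypothetical inputs: the list of expected fixes, the set of internal links found after the fix, and the status codes from the same crawl. An empty result means every acceptance criterion passed.

```python
def verify_fixes(expected_fixes, links_after, status_after):
    """Check each fix against a post-fix crawl.

    expected_fixes: list of (source_page, old_url, new_url) tuples.
    links_after: set of (source_page, target_url) pairs from the follow-up crawl.
    status_after: dict of URL -> status code from the follow-up crawl.
    Returns a list of human-readable failures; an empty list means all checks passed.
    """
    failures = []
    for source, old, new in expected_fixes:
        if (source, old) in links_after:
            failures.append(f"{source} still links to {old}")
        if (source, new) not in links_after:
            failures.append(f"{source} does not link to {new}")
        if status_after.get(new) != 200:
            failures.append(f"{new} returns {status_after.get(new)} instead of 200")
    return failures


if __name__ == "__main__":
    fixes = [("/category-page", "/old-product-page", "/new-product-page")]
    crawl_links = {("/category-page", "/new-product-page")}
    crawl_status = {"/new-product-page": 200}
    print(verify_fixes(fixes, crawl_links, crawl_status) or "All acceptance criteria met")
```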

4. Additional Considerations for Higher Impact Issues

While the primary request in this case is for broken link fixes, higher-impact tickets can involve more complex issues, such as the implementation of hreflang tags.

Developers may frequently encounter tickets involving hreflang issues, particularly in enterprise-level websites managing multi-location and multilingual content.

If your issue involves more complex SEO elements, such as hreflang implementation or redirects, be sure to include more detailed instructions and expectations for handling these tasks.

Example for Hreflang Tags:

Request to ensure correct hreflang tags are in place for all relevant pages based on location and language, to avoid geotargeting issues.
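A hreflang ticket is easier to action when it lists concrete failures. One common check is for return links: if page A names page B as an alternate, page B should name page A in return. The sketch below assumes a hypothetical input, a dictionary of each page's declared alternates taken from a crawl, and reports pairs where the return link is missing. It does not validate language codes or cover other hreflang rules.

```python
def missing_return_links(hreflang_map):
    """Find hreflang annotations that lack a return link.

    hreflang_map: dict of page URL -> {language code: alternate URL} as declared on that page.
    Returns sorted (page, alternate) pairs where the alternate does not point back to the page.
    """
    missing = []
    for page, alternates in hreflang_map.items():
        for alt_url in alternates.values():
            if alt_url == page:
                continue  # a self-reference needs no return link
            # Alternates that were not crawled count as missing a return link.
            if page not in hreflang_map.get(alt_url, {}).values():
                missing.append((page, alt_url))
    return sorted(missing)
```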

Concluding Views

Enterprise SEO audits require a structured approach, strong technical expertise, and excellent communication skills. The complexity of large-scale websites demands a suitable enterprise crawler, issue prioritisation, automation, and collaboration across teams to ensure recommendations are implemented effectively.

By focusing on key areas such as crawlability, indexability, internal linking, and backlink health, businesses can enhance their search visibility and drive sustainable organic growth.


Konrad Szymaniak is an experienced Enterprise SEO consultant and fractional SEO Director, renowned for developing scalable processes for large digital teams. Since 2019, he has partnered with industry leaders such as Frasers Group and Southampton Business School to deliver tailored strategies that maximise revenue through search engines.
