Disallowed URL in XML Sitemaps

This means that the URL in question is disallowed in robots.txt, yet is included in an XML Sitemap.

Why is this important?

Your XML Sitemap should only contain URLs you want search engines to index. If a URL is disallowed, search engines cannot crawl it, and so cannot properly index its content.

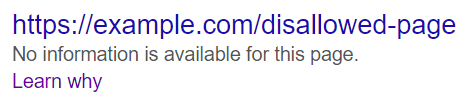

As such, including a disallowed URL in a sitemap provides conflicting information to search engines, and may mean that pages end up getting indexed that you do not wish to be indexed, which will typically end up looking like this in the search results:

What does the Hint check?

This Hint will trigger for any internal URL which matches a disallow rule in robots.txt, yet is also included in an XML Sitemap.

Examples that trigger this Hint:

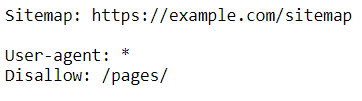

Consider the URL: https://example.com/pages/page-a, which is included in a submitted XML Sitemap.

The Hint would trigger for this URL if it matched a robots.txt 'disallow' rule, for example:
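As a rough illustration of this kind of match, the sketch below tests the example URL against a robots.txt using Python's standard `urllib.robotparser`. The rule `Disallow: /pages/` and the helper name `is_disallowed` are assumptions made for this example. This is not how Sitebulb itself performs the check.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, assumed for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /pages/
"""


def is_disallowed(url: str, robots_txt: str = ROBOTS_TXT, user_agent: str = "*") -> bool:
    """Return True if the given robots.txt rules block user_agent from fetching url."""
    parser = RobotFileParser()
    # parse() accepts an iterable of robots.txt lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # The example URL from the text sits under /pages/, so the assumed rule matches it.
    print(is_disallowed("https://example.com/pages/page-a"))
```

Note that the standard library parser does simple prefix matching in file order, so results may differ from a search engine's own matching for rules that rely on wildcards or on the most specific rule taking precedence.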

How do you resolve this issue?

The sitemap and the robots.txt file currently contradict each other, which is technically incorrect and may cause indexing issues.

To resolve it, you would need to do one of the following:

- If the URL should be disallowed, then remove it from all XML Sitemaps. Once removed, resubmit the sitemaps in Google Search Console.

- If the URL should not be disallowed, adjust or remove the corresponding disallow rule from the robots.txt file.
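For the first option, one possible way to strip disallowed URLs from a sitemap is sketched below, using Python's standard library. The function name `remove_disallowed`, the sample sitemap, and the sample rule are all hypothetical. The sketch assumes a plain `<urlset>` sitemap rather than a sitemap index.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

# Hypothetical inputs, assumed for illustration only.
SAMPLE_ROBOTS = "User-agent: *\nDisallow: /pages/\n"
SAMPLE_SITEMAP = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/pages/page-a</loc></url>
  <url><loc>https://example.com/blog/post</loc></url>
</urlset>
"""


def remove_disallowed(sitemap_xml: str, robots_txt: str, user_agent: str = "*"):
    """Return (cleaned sitemap XML, list of removed URLs)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())

    # Keep the sitemap namespace as the default one when serialising.
    ET.register_namespace("", SITEMAP_NS)
    root = ET.fromstring(sitemap_xml)

    removed = []
    # Iterate over a copy of the list, since entries are removed from root in the loop.
    for url_el in list(root.findall(f"{{{SITEMAP_NS}}}url")):
        loc = (url_el.findtext(f"{{{SITEMAP_NS}}}loc") or "").strip()
        if loc and not parser.can_fetch(user_agent, loc):
            root.remove(url_el)
            removed.append(loc)
    return ET.tostring(root, encoding="unicode"), removed


if __name__ == "__main__":
    cleaned, removed = remove_disallowed(SAMPLE_SITEMAP, SAMPLE_ROBOTS)
    print("Removed:", removed)
    print(cleaned)
```

In practice the sitemap is usually generated by a CMS or plugin, so the more durable fix is to exclude the URLs at the source. A script like this is mainly useful for auditing or for hand-maintained sitemaps.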
