Accessibility is the Future of Search

Published 15 November 2022

Welcome to the final installment of a 4-part blog series about search engine optimization (SEO) and its interconnectivity with accessibility ('A11Y' - because there are eleven characters between the 'A' and the 'Y'). With this series, Patrick Hathaway from Sitebulb and Matthew Luken from Deque Systems will each write complementary articles on the subject from their unique points of view.

In this series so far, we have been trying to make the argument that SEOs should care more about website accessibility.

The three articles that precede this one cover:

- Intro to Why Good SEO Supports Accessibility: how we as SEOs are being pushed to consider UX (and accessibility is a subset of UX), and the sheer scale of the opportunity that improved accessibility represents.

- Accessibility and SEO are Symbiotic: how the technology that powers website crawlers is so similar to how screen readers work, and why the symbiotic nature of SEO and accessibility means that they are mutually beneficial.

- 7 Tactics That Benefit Both SEO and Accessibility: the specific, foundational actions you can take on your website to improve accessibility, and why these are also beneficial to SEO.

Finally, we are looking to the future. This article explores recent technological developments to understand how they will change the future of search, and what that means from an accessibility standpoint. Matthew's counterpart article explores what new technologies mean for disabled users and an aging search audience.

The future of search

While it is both difficult and foolhardy to make proclamations about the future of SEO, it is abundantly clear that Google are putting their eggs in the 'mobile' basket. And while some users are starting to explore different types of platform to find answers to their questions, when it comes to search engines, Google is and will remain the dominant force.

One of Google's aims is to make the internet accessible to all, which they have recently demonstrated with a $1 billion investment into Africa's digital transformation. What we are beginning to see, as connectivity increases across the continent, is that millions of people are coming online for the first time ever, with mobile as their first experience of the internet. Many of these people have never used a QWERTY keyboard before, so encountering one on a phone can be a rather strange experience.

As of April 2022, there were 5 billion internet users worldwide, which is 63% of the global population. This means there are almost 3 billion people yet to come online, whose first experiences will also be 'mobile first.' If Google really does want to make the internet universally accessible, they need to embrace a world where a keyboard is not the primary input method.

Searching with your voice

One of the ways in which Google are looking to solve this problem is by making it possible to interact via voice search: recognising speech patterns and transforming voice signals into text (as we have covered in previous articles, search algorithms' dependence upon textual content is deeply rooted).

Advances in Natural Language Processing (NLP) have transformed the industry in recent years. NLP is a branch of artificial intelligence focused on the recognition and understanding of natural language; modern NLP systems typically use machine learning to train neural networks on large amounts of data.

This year, Google's LaMDA, a large language model for chatbot applications, made headlines: the model was so convincing that Blake Lemoine, a Google engineer, came to believe it had become sentient.

"If I didn't know exactly what it was, which is this computer program we built recently, I'd think it was a 7-year-old, 8-year-old kid that happens to know physics," said Lemoine, 41.

Despite this worrying portent, the advances in NLP are beginning to revolutionize how Google can interpret and respond to search queries.

Of course, NLP plays directly into voice search, and Google are already using NLP for:

- Speech recognition and synthesis (speech-to-text and text-to-speech)

- Recognizing grammatical information

- Understanding the function of individual words in a sentence (subject, object, verb, article, etc…)

- Understanding sentence structure and how that affects the underlying meaning

In May 2021, Google announced a powerful new language-based technology called Multitask Unified Model (MUM), which can understand language and is trained across 75 different languages.

It can also understand information across different formats simultaneously, so it can combine text and image inputs to build a better understanding of your query. Google are still in the early days of exploring MUM, but they claim this sort of query is something you will be able to do:

"Eventually, you might be able to take a photo of your hiking boots and ask, 'can I use these to hike Mt. Fuji?' MUM would understand the image and connect it with your question to let you know your boots would work just fine. It could then point you to a blog with a list of recommended gear."

Their claims for the potential of MUM also include audio and video inputs:

"And MUM is multimodal, so it understands information across text and images and, in the future, can expand to more modalities like video and audio."

In and of itself, voice search helps from an accessibility perspective, enabling search functionality for users with mobility issues and those who find it difficult to type.

Furthermore, Google have recently joined forces with other tech giants in support of the Speech Accessibility Project, to create datasets of impaired speech that can help accelerate improvements to automated speech recognition (ASR) for those with non-standard speech patterns.

This includes speech affected by amyotrophic lateral sclerosis (ALS, also known as Lou Gehrig's disease), Parkinson's disease, cerebral palsy, and Down syndrome.

The aim is to collate a more diverse machine learning dataset that can train speech recognition models to recognise a greater diversity of speech.

Searching with your camera

Machine learning advances are also being used to power visual search, through Google Lens, which is an AI-powered technology that works via your smartphone camera, to not only identify but also interpret an object in front of the camera lens and provide vertical search results like images, translation and shopping.

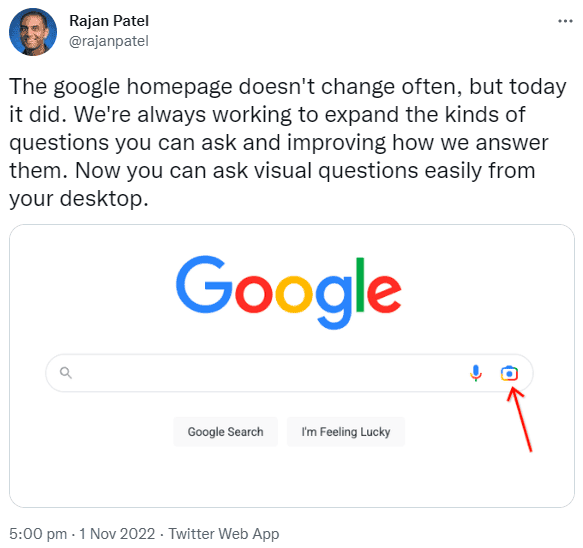

Google have started to double down on their Lens integration, as demonstrated recently by the addition of a 'Lens' button directly on the homepage:

Earlier this year they also launched the beta of their MUM-powered 'multisearch' for Google Lens, which allows you to extract an object from an image, then add extra text to include in the search.

For example, say you find a dress in a store and you like the style, but you want it in a different colour:

- Scan the dress with Google Lens

- Click 'Add to search' and type in the colour you are looking for

- See results for the dress style and the colour that you want

Google Lens is also now more tightly integrated with Google Photos, which plugs straight into Shopping results. Now, when you view any screenshot in Google Photos, there will be a suggestion to search the photo with Lens, allowing you to see search results that can help you find that pair of shoes or wallpaper pattern that caught your eye.

This sort of experience is powered by their Shopping Graph:

"The Shopping Graph is a dynamic, AI-enhanced model that understands a constantly-changing set of products, sellers, brands, reviews and most importantly, the product information and inventory data we receive from brands and retailers directly - as well as how those attributes relate to one another."

Sound familiar? It's like the Knowledge Graph but specifically for ecommerce results, and considers information like:

- Price

- Website

- Reviews

- SKU and inventory data

- Videos

In our last post we covered the role of structured data for both SEO and accessibility, and the Shopping Graph shows specifically how that data can tie into commercial results and image search.
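As a rough illustration of the kind of product attributes listed above, here is a minimal Python sketch that builds schema.org `Product` markup as JSON-LD. It assumes the standard schema.org `Product`, `Offer` and `AggregateRating` types; the function name `product_jsonld` and all product values are hypothetical, and this shows only the markup a page might carry, not how Google actually ingests it into the Shopping Graph.

```python
import json


def product_jsonld(name, sku, url, price, currency, in_stock, rating, review_count):
    """Build a schema.org Product object as a JSON-LD string (hypothetical helper)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,  # SKU / inventory identifier
        "offers": {
            "@type": "Offer",
            "url": url,  # the website where the product can be bought
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": (
                "https://schema.org/InStock" if in_stock else "https://schema.org/OutOfStock"
            ),
        },
        "aggregateRating": {  # summarises the reviews
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }
    return json.dumps(data, indent=2)


# Hypothetical example product; the output is wrapped in the script tag used on the page.
snippet = product_jsonld(
    "Trail hiking boot", "BOOT-042", "https://example.com/boots", 89.0, "GBP", True, 4.6, 128
)
print(f'<script type="application/ld+json">\n{snippet}\n</script>')
```

Generating the markup from the same product data that drives the visible page is one way to keep price and availability consistent between what users see and what machines read.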

Because it allows users to search based on their visual surroundings, this technology can remove barriers for users with low vision or visual impairments. Google Lens (and similar technology) can help with reading, object identification and translation, in addition to search-based activities like research and shopping.

In a Google Lens review published by Perkins School for the Blind, the reviewer notes:

"I love showing it to people with newly diagnosed low vision because it can be a game changer for how they access items in their environment."

At their 2022 I/O event, Google showed how technology could remove further barriers, with a teaser video of upcoming AR glasses that seem truly remarkable. Combining the power of natural language processing, transcription and translation, the AR glasses demoed in the video show language translation happening in real time.

If you have not seen it already, the video is worth watching. It shows a prototype version of the AR glasses being used to allow a mother who only speaks Mandarin to communicate with her English-speaking daughter, and ends with a genuinely moving scene of a hearing-impaired mother seeing her daughter talk.

What does the future hold for accessibility and SEO?

We know that the future of search is tied to mobile, and to some extent, to voice search. It goes without saying that the advancement of voice search is a positive when it comes to web accessibility, as it enables users who have difficulty typing to access the search experience.

By putting image search front and centre, Google is not only signposting their intentions and their expectations for the direction of search, but also their confidence in their machine learning models to do a satisfactory job of object classification. This technology has the potential to change how visually impaired users interact with the world around them.

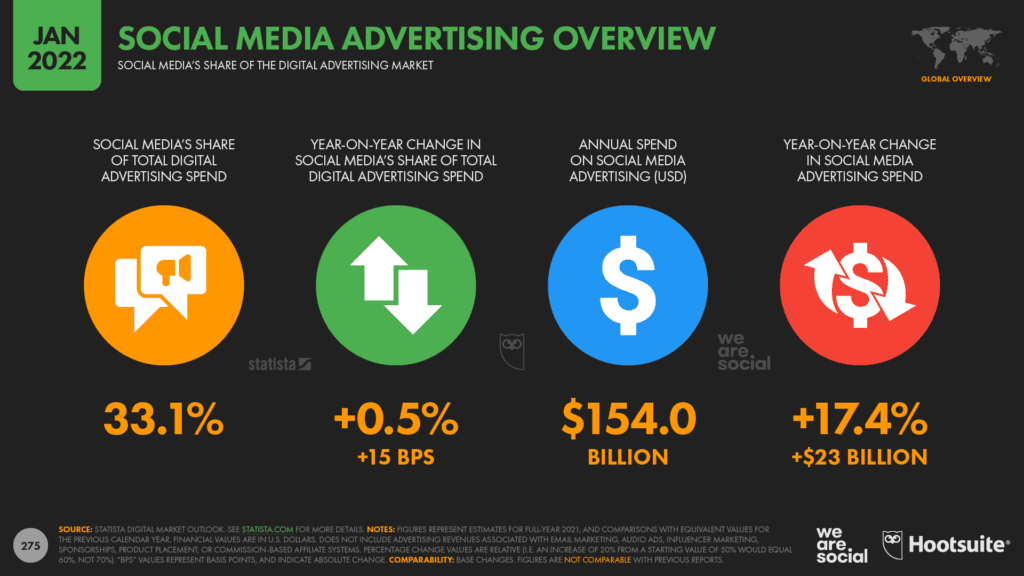

But even if we set accessibility aside, the commercial argument itself is compelling enough. Google have the data about how much time we are all spending on our phones, and they are well aware that social media platforms have become part of the purchasing journey.

If you're looking for a how-to video, Google doesn't want you to go straight to TikTok or YouTube. If you're looking for an inspirational image, they don’t want Pinterest and Instagram to be the only places you consider.

Google wants to be the place we all go to for answers - for all types of query and in all formats - and they want to provide satisfactory answers for as many users as possible.

Optimizing for accessibility is optimizing for users - all users - and this is the direction that Google is heading.

What should your brand do next?

The future of search is intertwined with AI and machine learning, powering new ways and means for users to explore the internet and the world around them.

Search algorithms have already become so complex that no single person could ever explain how all the various algorithms interact, and the growing role of AI and machine learning only makes them more opaque.

AI and machine learning are about taking unstructured data and trying to apply structure to it to recognise patterns and make inferences.

But what powers the machine learning algorithms?

Data training sets.

Much of machine learning starts out with large, properly labeled data sets and builds classification models from that data, which can then be applied to previously unseen data. From the input data, these algorithms can develop predictive models, and group and interpret new data.
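To make "learn from labeled data, then classify unseen data" concrete, here is a toy Python sketch. It uses a nearest-centroid classifier, a deliberately simple technique chosen for illustration only; search engines' real models are far more complex and not public. The two numeric features and the page-type labels are invented for the example.

```python
from math import dist


def train(examples):
    """Average the feature vectors for each label, giving one centroid per label."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict(centroids, features):
    """Assign previously unseen data to the label whose centroid is closest."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical labeled training set: (two invented numeric features, label).
training = [
    ([0.9, 0.1], "product page"),
    ([0.8, 0.2], "product page"),
    ([0.1, 0.9], "blog post"),
    ([0.2, 0.8], "blog post"),
]
model = train(training)
print(predict(model, [0.85, 0.15]))  # → product page
```

The point of the toy is that the model can only be as good as its labels: mislabel the training examples and the centroids, and every later prediction, shift with them.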

By properly labeling your data, utilizing semantic HTML markup, and providing accurate structured data, you can appeal to these machine learning algorithms, clearly demonstrating the very patterns they have been taught to recognise.

As we've outlined before, these practices make content consumption easier for assistive tech like screen readers, thus benefiting SEO and accessibility (remember, they are symbiotic).
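As a rough illustration of what well-labeled, semantic HTML exposes to machines, here is a minimal Python sketch using the standard library's `html.parser` to pull out the heading outline and image alt text from a page, and to flag images with no `alt` attribute. It is a simplification under stated assumptions: real crawlers render pages and screen readers work from the browser's accessibility tree, so this is not how either actually operates. The class name `OutlineParser` and the sample page are hypothetical.

```python
from html.parser import HTMLParser


class OutlineParser(HTMLParser):
    """Collect headings and image alt text from an HTML string (hypothetical helper)."""

    HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self):
        super().__init__()
        self.outline = []      # (tag, text) pairs in document order
        self.missing_alt = []  # src of images with no alt attribute at all
        self._current = None   # heading tag we are currently inside, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in self.HEADINGS:
            self._current, self._text = tag, []
        elif tag == "img":
            if "alt" in attrs:
                # alt="" is kept: it marks an image as decorative.
                self.outline.append(("img", attrs["alt"]))
            else:
                self.missing_alt.append(attrs.get("src", "?"))

    def handle_data(self, data):
        if self._current:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == self._current:
            self.outline.append((tag, "".join(self._text).strip()))
            self._current = None


page = """
<h1>Hiking boots</h1>
<img src="boot.jpg" alt="Brown leather hiking boot">
<h2>Sizing guide</h2>
<img src="chart.png">
"""
parser = OutlineParser()
parser.feed(page)
print(parser.outline)      # headings and alt text, in order
print(parser.missing_alt)  # ['chart.png']
```

The image without alt text is invisible to this outline, just as it would be to a screen reader user or a text-dependent search algorithm.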

And when it comes to natural language processing, this is about understanding what your users are actually searching for and optimizing your content accordingly. It is about optimizing for users.

Website accessibility is not a ranking factor, and it may never be. But that isn't the point. The point is that technology will change how people access the internet in the first place. Will you be ready for it?

We hope you have found this series on accessibility and SEO useful and enjoyable. If so, please be kind enough to share it with your friends, colleagues, and your network. And of course, don't forget to also read Matthew's counterpart article over on Deque!

About Matthew

Matthew Luken is a Vice President & Principal Strategy Consultant at Deque Systems, Inc. For the four years prior to Deque, Matthew built out U.S. Bank's enterprise-level Digital Accessibility program. He grew the team from two contractor positions to an overall team of ~75 consultants and leaders providing accessibility design reviews, automated / manual / compliance testing services, defect remediation consultation, and documentation / creation of best practices. In this program there were 1,500+ implementations of Axe Auditor and almost 4,000 implementations of Deque University and Axe DevTools. Matthew was also Head of UXDesign's Accessibility Center of Practice, where he was responsible for creating seamless procedures and processes that support the digital accessibility team's mission & objectives while dovetailing into the company's other Centers of Practice like DEI, employee-facing services, Risk & Compliance, etc. The work of Matthew and his team has been recognized by American Banker, Forrester, Business Journal, and The Banker. Across his background in user experience and service design, Matthew has worked with over 275 brands around the world covering every vertical and category. He continues to teach User Experience, Service Design and Digital Accessibility at the college level, as well as mentor new digital designers through several different mentorship programs around the USA.

About Deque Systems

Deque (pronounced dee-cue) is a web accessibility software and services company, and our mission is Digital Equality. We believe everyone, regardless of their ability, should have equal access to the information, services, applications, and everything else on the web.

We work with enterprise-level businesses and organizations to ensure that their sites and mobile apps are accessible. Installed in 475,000+ browsers and with 5,000+ audit projects completed, Deque is the industry standard.

Patrick spends most of his time trying to keep the documentation up to speed with Gareth's non-stop development. When he's not doing that, he can usually be found abusing Sitebulb customers in his beloved release notes.

Patrick Hathaway