How to Optimize Your Crawl Budget: Insights From Top Technical SEO Experts

Published May 19, 2025

Massive thanks to Aishat Abdulfatah for this guide on crawl budget optimization. Learn 8 expert-proven strategies for optimizing your crawl budget.

You cannot simply force a Google crawl. Neither can you map out exactly which pages and in which order Google should crawl your website.

If you are a technical SEO who manages a large or rapidly changing website, this lack of control over Google’s crawling makes it tough to ensure only the search-relevant and up-to-date pages on your website are crawled.

Since you can't know exactly which URLs Google’s crawlers will visit, you risk wasting your crawl budget on pages that don't contribute to your website’s performance on search. This can leave your newly published and updated pages uncrawled and unindexed.

Which raises the question: How can you make the most of your crawl budget in a situation like this?

I dissected this question with 4 technical SEO experts who have spent decades optimizing crawl budgets for websites of varying sizes and from various industries. This article shares their thoughts on how to strategically guide Google to prioritize (and crawl) your most valuable content.

Let’s get crawling.

Contents:

- What is Crawl Budget?

- Should You Worry About Your Crawl Budget?

- 8 Strategies to Optimize Your Crawl Budget

What is Crawl Budget?

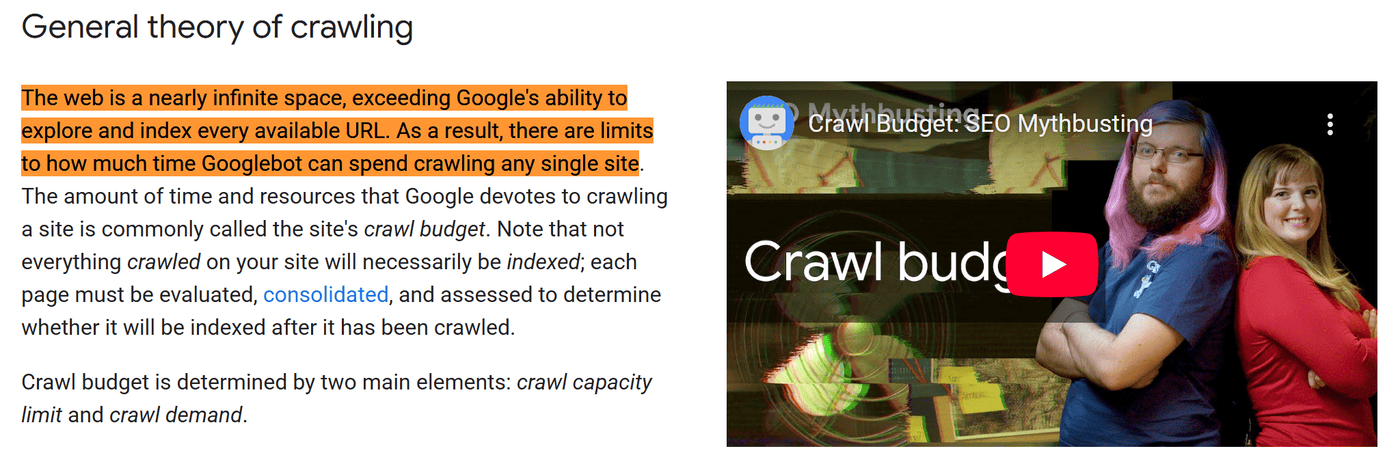

Crawl budget is the number of URLs on your website that Google crawls within a particular time frame, usually 24 hours. It covers every URL Googlebot requests, whether or not the page ends up indexed, and represents how much crawl resource Google is willing to dedicate to your website.

Your website’s crawl budget depends on two things:

- Google’s perception of how often searchers wish to see your content (crawl demand)

- How well Google crawlers can interact with your website without hampering its functionality (crawl capacity limit)

Google allocates crawl budget because, “The web is a nearly infinite space, exceeding Google's ability to explore and index every available URL. As a result, there are limits to how much time Googlebot can spend crawling any single site.”

So, every website’s crawl budget quantifies how much time and resource Google wishes to dedicate to it.

While your crawl budget can fluctuate (e.g. during a website migration) or gradually increase (e.g. as your website becomes more authoritative and your crawl demand increases), it is often fairly stable over a period of time.

Should You Worry About Your Crawl Budget?

If your website has fewer than 10,000 pages and you don't update your content pages frequently, you don't need to fret about your crawl budget. Google says that “merely keeping your sitemap up to date and checking your index coverage regularly is adequate.”

According to this guide from Google, crawl budget optimization is mainly for websites that are:

- Large (1 million+ unique pages) with content that changes moderately often (once a week)

- Medium in size (10,000+ unique pages) with very rapidly changing content (daily)

- Reported by Search Console as having a large portion of their total URLs classified as Discovered - currently not indexed

If your website falls within these categories, you must frequently track and optimize your crawl budget to ensure only relevant and updated content pages are crawled, indexed, and displayed on search.

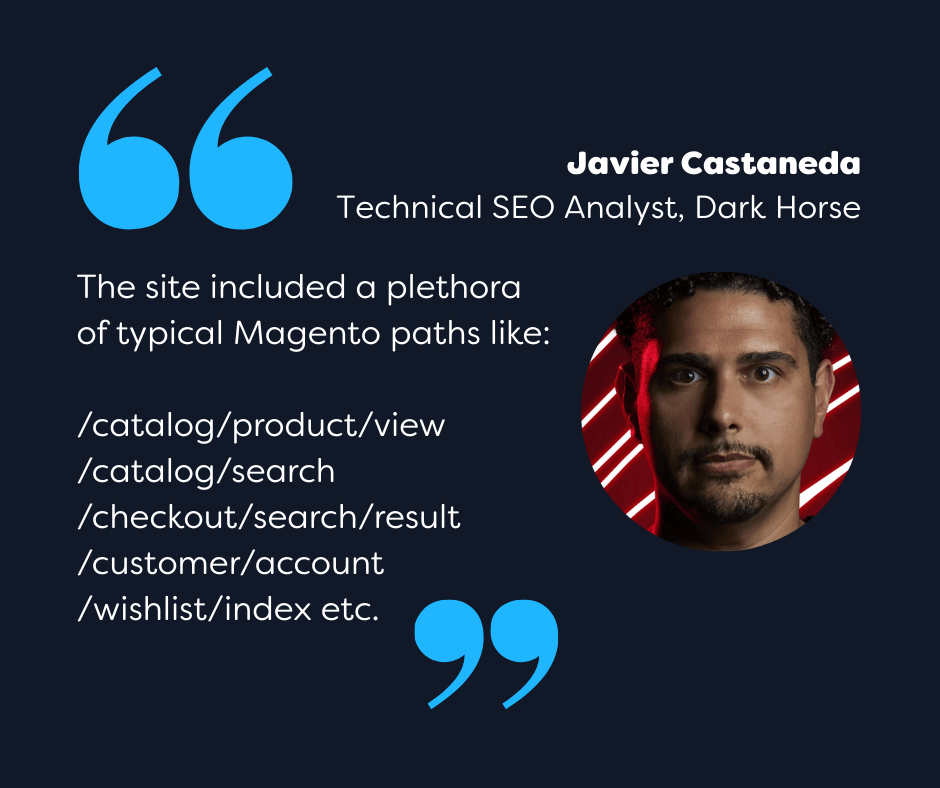

“One way to ensure you focus on this,” advises Javier Castaneda, Technical SEO Analyst at Dark Horse, “is to remind yourself that every crawl request spent on low-value, parameterized, or duplicated content is a lost opportunity to have high-value, traffic-driving pages crawled and indexed.”

In other words, if you don’t want your low-value pages to keep getting crawled instead of the important ones, you should take crawl budget optimization seriously.

8 Strategies to Optimize Your Crawl Budget

You can't ask Google for a bigger crawl budget, but you can make the most of the one you have. Here are 8 expert-proven strategies you can adopt.

1. Get rid of duplicate content

Duplicate content leads to crawl waste by causing Googlebot to expend crawl requests on pages with the same or similar content. Plus, if these pages are crawled and indexed, they might be displayed together in search, confusing your readers and leading to poor user experience.

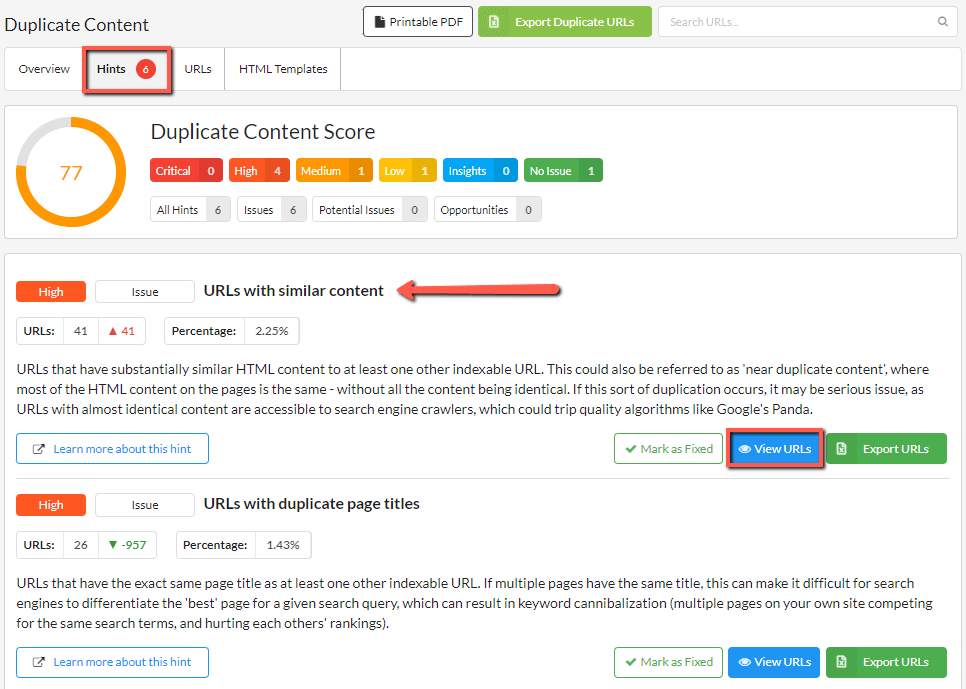

Javier told me that he was once tasked with auditing a Magento e-commerce website that was not properly displaying new products on search. After running a comprehensive audit with Sitebulb, he discovered that the website was plagued by duplicate content issues caused by unnecessary parameterized URLs and automatically generated pages.

These duplicate content pages had been indexed without a clear purpose, leading to redundant versions of product listings and unnecessary crawling depth. With Sitebulb’s duplicate content checker report, he was able to visualize all the duplicate content pages and get clear pointers on what to do with them.

Following these Sitebulb tips, he consolidated the duplicate URLs and used rel=canonical to point Google towards the preferred versions. Within two months, the crawl rate for these redundant URLs decreased by about 35%, while traffic to canonicalized product pages increased by around 12%.

According to Javier, this result means that “Google spent less time crawling irrelevant or duplicate pages and more time on higher-value content, leading to faster indexing of newer products and better use of crawl budget.”

Since the crawl budget that was previously wasted on duplicate content was now freed up for crawling important product pages, it was not a surprise that after these changes, newer products started ranking within hours of being added to the website.
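To make the duplicate-hunting step more concrete, here is a minimal Python sketch that groups URLs whose body text is identical once whitespace and letter case are normalized. It is not how Sitebulb's duplicate content checker works. The `pages` dictionary, the example.com URLs, and both function names are hypothetical stand-ins for your own crawl export.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(body_text: str) -> str:
    """Hash the page text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", body_text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def group_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Return groups of URLs that share the same normalized body text."""
    groups = defaultdict(list)
    for url, body in pages.items():
        groups[content_fingerprint(body)].append(url)
    # Only groups with more than one URL are duplicates.
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical crawl output: URL -> extracted body text.
pages = {
    "https://example.com/shoes": "Red running shoes",
    "https://example.com/shoes?sort=price": "Red  running shoes ",
    "https://example.com/hats": "Wool hats",
}

duplicate_groups = group_duplicates(pages)
```

Each group is a candidate for consolidation: pick one preferred URL and point the others at it with rel=canonical. Exact hashing only catches identical text, so near-duplicates would need a similarity measure instead.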

2. Make use of your robots.txt file

Your robots.txt file signals to Googlebot which URLs you don't want it to crawl using ‘disallow’ rules. You can use this file to prevent URLs with low SEO value (e.g. API endpoints, feed URLs, and checkout pages) from being crawled and wasting your crawl budget.

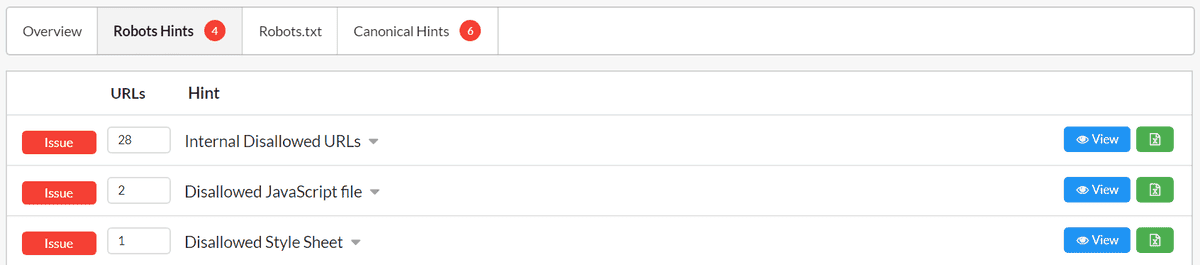

Using Sitebulb’s indexability check, Javier discovered—on the same Magento e-commerce website—several backend paths and low-value URLs that were being crawled but not providing any ranking value.

So, he updated the robots.txt file to block these paths from being crawled by Google.

After implementing these changes, Google Search Console indicated fewer "Discovered - Currently Not Indexed" statuses, meaning Google was no longer using crawl budget on these lower-value sections.

“Important pages were also being crawled and indexed faster,” noted Javier, “with time to indexing reduced by approximately 25% for new products and collections.”

Essentially, by blocking Googlebot from accessing pages that were better off not being crawled, Javier was able to reclaim the crawl budget they had been wasting.
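Before shipping new disallow rules, it helps to check which URLs they would actually block. The sketch below does that with Python's standard-library `urllib.robotparser`. The rules and URLs are illustrative assumptions, not the ones from Javier's audit, and `blocked_urls` is a hypothetical helper name.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: the paths are placeholders for your own low-value sections.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /api/
Disallow: /feed/
"""


def blocked_urls(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt rules disallow for `agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


urls = [
    "https://example.com/products/red-shoes",
    "https://example.com/checkout/cart",
    "https://example.com/api/v1/stock",
]

blocked = blocked_urls(ROBOTS_TXT, urls)
```

Treat this as a rough pre-check only. Python's parser matches rules by simple path prefix and does not implement the `*` and `$` wildcards that Google supports, so rules that rely on wildcards need to be tested another way.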

Read more: Improving Crawling & Indexing with Noindex, Robots.txt & Rel Attributes

3. Reduce your redirect chains

Redirects can be a double-edged sword. While they are necessary to direct crawlers and users to the right website content, too many of them, especially chained within one crawl request, can make your content look too far away to reach and lead Google to abandon the crawl. And when abandoned crawls pile up on your website, Google sends fewer crawl requests and your crawl budget shrinks.

That’s why Vukasin Ilic, SEO specialist and founder of Linkter, advises that you have “no more than 2 redirect pages and to always aim for only 1.” When your redirect chain is 3 or more hops long, you risk Google abandoning the crawl.

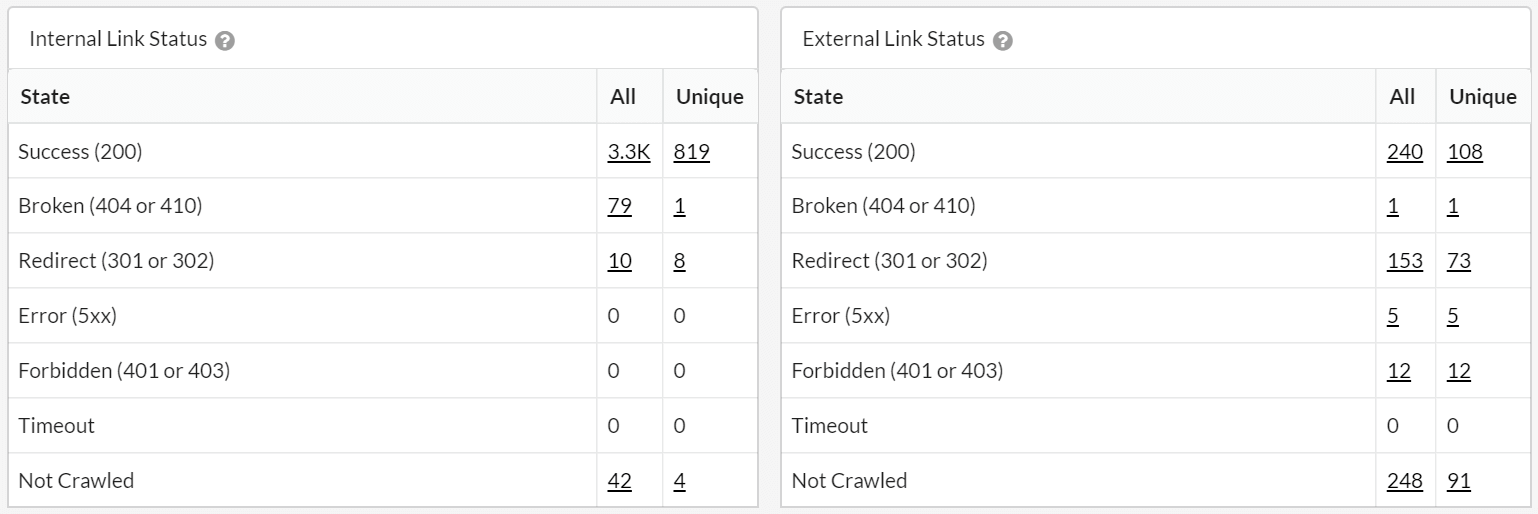

On one website Javier worked on, a Sitebulb audit revealed multiple 404 errors and 301 redirect chains.

Following Sitebulb’s suggestions, he fixed all the broken links and consolidated the redirects where possible. He also added custom error pages to guide both crawlers and users effectively.

These corrections resulted in a noticeable decrease in redirect chains—from an average of 5 hops down to just 1 hop in most cases.

“After this,” Javier added, “the pages that had been buried due to these chains started appearing in the Google index, and the Crawl Stats report in Google Search Console showed a more even crawl distribution.”

This meant that the pages Google abandoned due to multiple redirect chains were finally being crawled and were no longer wasting crawl requests and crawl budget.
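Consolidating redirects, as described above, boils down to pointing every old URL straight at its final destination. Here is a small Python sketch of that idea, working on a redirect map you might export from a crawl. The map contents and the function names are assumptions made for illustration.

```python
def redirect_chain(start: str, redirects: dict[str, str]) -> list[str]:
    """Return every URL visited from `start`, ending at the final destination."""
    chain = [start]
    while chain[-1] in redirects:
        next_url = redirects[chain[-1]]
        chain.append(next_url)
        if next_url in chain[:-1]:  # redirect loop: stop rather than spin forever
            break
    return chain


def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Point each source straight at its final destination (loops are skipped)."""
    flat = {}
    for source in redirects:
        chain = redirect_chain(source, redirects)
        if chain.count(chain[-1]) > 1:  # loop detected, needs a manual fix
            continue
        flat[source] = chain[-1]
    return flat


# Hypothetical redirect map: source path -> target path.
redirects = {"/old-a": "/old-b", "/old-b": "/old-c", "/old-c": "/new"}

hops = len(redirect_chain("/old-a", redirects)) - 1  # 3 hops before flattening
flattened = flatten_redirects(redirects)
```

After flattening, every source in this example reaches `/new` in a single hop, which matches the "aim for only 1" advice. Redirect loops are left out of the result on purpose so they can be reviewed by hand.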

Read more: The Ultimate Guide to 301 Redirects (& 302) for SEO

4. Do internal linking right

Internal links are to your website what doors are to apartment rooms. They lead from and connect different pages to one another. These connectors are important for both users and Googlebot to navigate your website easily. Without them, Googlebot has to find every page individually, spending considerably more time. This can make your content appear hard to reach, waste your crawl attempts, and provoke a crawl budget reduction.

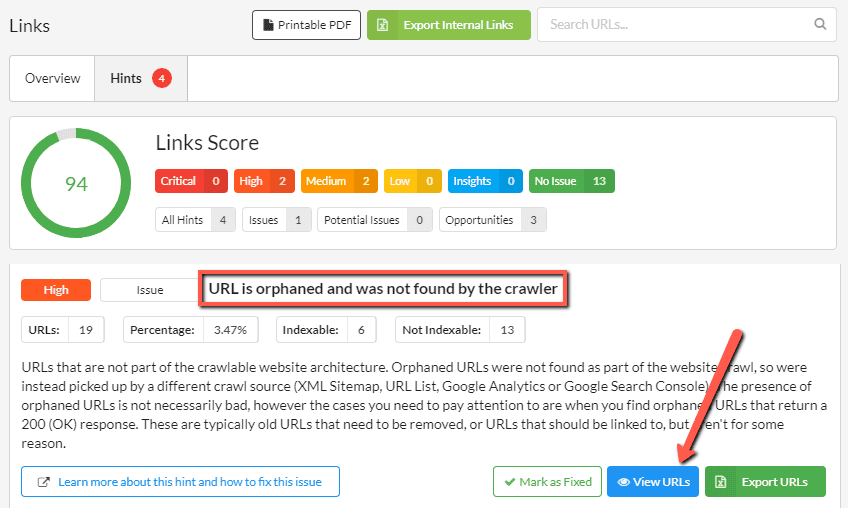

So, having orphaned pages—pages that no other page links to—is like throwing away your pass to having Google easily crawl your website.

To spot your orphaned pages, connect Sitebulb’s crawler to your Google Analytics, Google Search Console, or XML sitemaps before you run an audit. That way, any URL not linked internally but present in these sources will be reported in your crawl. After the audit, you can find these orphaned URLs in the audit overview, link Hints, or URL reports.
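The logic behind this check is a set comparison: take every URL you know about from external sources, then subtract the URLs that at least one other page links to. A minimal Python sketch, assuming you already have both lists exported (the paths, variable names, and `find_orphans` helper are hypothetical):

```python
def find_orphans(
    known_urls: set[str],
    internal_links: set[tuple[str, str]],
    homepage: str,
) -> set[str]:
    """Return known URLs that no *other* page links to."""
    # A page linking to itself does not count as an internal link to it.
    linked_to = {target for source, target in internal_links if source != target}
    # The homepage is the crawl entry point, so it is never reported as an orphan.
    return known_urls - linked_to - {homepage}


# Hypothetical inputs: URLs from the sitemap / analytics, and (source, target) link pairs.
known_urls = {"/", "/blog/", "/blog/crawl-budget", "/blog/old-guide"}
internal_links = {
    ("/", "/blog/"),
    ("/blog/", "/blog/crawl-budget"),
    ("/blog/old-guide", "/blog/old-guide"),
}

orphans = find_orphans(known_urls, internal_links, homepage="/")
```

Here `/blog/old-guide` is the only orphan, because the sole link pointing at it comes from the page itself.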

After finding your orphaned pages, it’s time to add some internal links. A handy tip for better success at this is to be strategic and link from your highest-ranking website pages. That way, your orphaned pages can receive a significant traffic boost.

Javier told me about a Sitebulb audit he performed on a WordPress blog where he found many orphaned pages that contained content critical to his target keywords. So, he linked contextually to these orphaned pages from relevant high-authority pages to strengthen their discoverability.

Within a month, these orphaned pages (that weren’t ranking before) began receiving impressions in the SERPs. This meant that they were finally being discovered, crawled, and indexed.

Read more: How to Find & Fix Orphan Pages

5. Monitor your server log files

Server log files are records of every event related to your server, such as user requests, access times, and errors. When you monitor your server log files, you can clearly see what users and crawlers experience when they interact with your website.

This means you can spot crawl budget-wasting events like:

- Low-value pages being crawled

- Pages taking too long to render information

- Important pages being bypassed by Googlebot

- Pages with sensitive website information being crawled

You can extract your server log files from web servers and process them using dedicated log file analyzers like AWStats. When Javier did this for a content-heavy blog he was auditing, he found that some low-value pages were getting repeatedly crawled.

To get a better picture of what crawlers were experiencing, he used Sitebulb’s crawl visualization to understand where those pages sat within the site architecture and how internal links were contributing to the crawl behaviour.

Based on these insights, he implemented changes such as:

- Adding noindex tags to thin or low-value pages

- Redirecting Googlebot to more relevant category or pillar URLs

- Adjusting internal links to consolidate crawl equity around strategic content

Soon after, he reported that server log analysis showed a drop of around 40% in Googlebot requests for low-value pages, freeing up crawl budget. As a result, traffic to pillar content increased by 20% over the next quarter since these pages received more consistent crawl attention.
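If you don't have a dedicated log analyzer to hand, a few lines of Python can give a first look at where Googlebot spends its requests. This sketch assumes access logs in the common "combined" format and uses made-up sample lines. The regular expression and the `googlebot_hits` name are illustrative choices, not part of any tool mentioned above.

```python
import re
from collections import Counter

# Matches the request, status, size, referrer, and user agent of a combined-format line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def googlebot_hits(log_lines: list[str]) -> Counter:
    """Count requests per path where the user agent claims to be Googlebot."""
    hits = Counter()
    for line in log_lines:
        match = LOG_LINE.search(line)
        if match and "Googlebot" in match["agent"]:
            hits[match["path"]] += 1
    return hits


# Hypothetical log lines for illustration.
sample_log = [
    f'66.249.66.1 - - [19/May/2025:10:00:00 +0000] "GET /tag/misc HTTP/1.1" 200 512 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [19/May/2025:10:00:05 +0000] "GET /tag/misc HTTP/1.1" 200 512 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [19/May/2025:10:00:09 +0000] "GET /guides/crawl-budget HTTP/1.1" 200 2048 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [19/May/2025:10:01:00 +0000] "GET /guides/crawl-budget HTTP/1.1" 200 2048 "-" "Mozilla/5.0 Firefox/126.0"',
]

hits = googlebot_hits(sample_log)
most_crawled = hits.most_common()
```

Sorting by hit count surfaces the paths that soak up the most crawl requests, which is where low-value pages tend to show up. Keep in mind that the user agent string can be spoofed, so a stricter analysis would also verify that the requests really come from Google.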

6. Organize your query parameters

Query parameters, while necessary to customize and provide contextual website responses, can waste your crawl budget when there are no indications to Googlebot about whether they are separate pages or variations of a main URL. So when they are indeed variations (which is more often the case), Googlebot can crawl each one of them and waste your crawl budget.

To avoid this, use Sitebulb to identify your parameterized URLs, then canonicalize them or block them from being crawled.

On a WooCommerce store Javier audited using Sitebulb, he found that product category URLs had dozens of parameterized versions being crawled (e.g. ?sort=price or ?filter=color). To correct this, he used Sitebulb to identify the URLs and canonicalized or blocked them via robots.txt.

“Within three months,” Javier reported, “I noticed that Google's crawl activity on the site became more focused, with fewer parameterized URLs appearing in server logs.”

This reduction allowed Google to spend its crawl budget on other, more valuable product and category pages. Crawl efficiency increased, and the pages with valuable content were indexed more frequently.
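To see how many parameterized variants collapse into each main URL, you can strip the parameters that only sort or filter a listing and group what is left. The Python sketch below does this with the standard library. The parameter names come from the examples above, while treating them as safe to drop is an assumption you would need to confirm for your own site.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: these parameters only reorder or filter a listing, never change the page.
NON_CANONICAL_PARAMS = {"sort", "filter"}


def canonical_url(url: str) -> str:
    """Drop non-canonical parameters and sort the rest for a stable URL."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in NON_CANONICAL_PARAMS
    )
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def group_variants(urls: list[str]) -> dict[str, list[str]]:
    """Map each canonical URL to the crawled variants that reduce to it."""
    groups = defaultdict(list)
    for url in urls:
        groups[canonical_url(url)].append(url)
    return dict(groups)


# Hypothetical crawled URLs.
crawled = [
    "https://example.com/shoes",
    "https://example.com/shoes?sort=price",
    "https://example.com/shoes?filter=color&page=2",
]

variants = group_variants(crawled)
```

The keys of `variants` are the URLs a rel=canonical tag could point to, and the size of each list shows how much crawl activity a single listing page is attracting through its parameters.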

7. Update your sitemap(s)

An updated sitemap is Googlebot’s best teammate. Together, they can do a finer job at finding and crawling your website’s most important URLs so your crawl budget is spent on pages with the most SEO value.

Sitebulb helps optimize this process by ensuring, as Chris Lever, Head of Technical SEO at Bring Digital, puts it, “that our sitemap is in sync with our site.”

Using Sitebulb’s XML sitemap checker, you can run sitemap audits to ensure only the right URLs are in your sitemap. And if you spot unruly URLs like 404s, redirects, noindexed URLs, or orphan URLs, Sitebulb offers detailed suggestions on handling these issues.
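The idea of keeping a sitemap "in sync" can be sketched as a comparison between the URLs listed in the sitemap and the status codes seen in a crawl. The following Python example is a simplified illustration, not Sitebulb's checker. The sitemap content, the `crawl_status` dictionary, and the function names are all assumptions.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> list[str]:
    """Extract every <loc> value from a URL sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]


def sitemap_problems(xml_text: str, crawl_status: dict[str, int]) -> dict[str, str]:
    """Flag sitemap URLs that were not crawled or did not return 200."""
    problems = {}
    for url in sitemap_urls(xml_text):
        status = crawl_status.get(url)
        if status is None:
            problems[url] = "not found in crawl"
        elif status != 200:
            problems[url] = f"returns {status}"
    return problems


# Hypothetical sitemap and crawl results.
SITEMAP_XML = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/old-page</loc></url>
  <url><loc>https://example.com/missing</loc></url>
</urlset>"""

crawl_status = {"https://example.com/": 200, "https://example.com/old-page": 301}

problems = sitemap_problems(SITEMAP_XML, crawl_status)
```

Anything that lands in `problems` is a candidate for removal from the sitemap or for a fix on the site. This sketch only looks at status codes, so catching noindexed URLs would need the crawl to record that signal as well.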

Read more: The Ultimate Guide to XML Sitemaps for SEO

8. Enhance your server infrastructure

While optimizing your website structure so Googlebot can discover the most important pages first and fast, you must also keep in mind the other prong to effective crawling—your website server being able to handle Google’s crawl requests without crashing or dilly-dallying.

As Gary Illyes, SEO Analyst at Google, shares in this episode of Search Off the Record, “The upper limit of crawl budget is determined by what the server tells us about how much it can handle. At one point, we would crash the server and we wouldn’t be able to connect to it. That would be a very clear signal that we have to slow down.”

But if slowing down isn’t an option for you—for instance, if you run a news site, a review platform, or an e-commerce store with a large product catalog—upgrading your server infrastructure is the next step towards significantly improving crawling efficiency and optimizing your crawl budget.

Upgrading your server resources will accelerate your website response times, increase your crawl capacity limit, and keep abandoned crawls and crawl waste at bay.

Become Google’s Website Tour Guide

Crawl budget optimization is a bit like how a tour guide knows a city better than a tourist. You are more familiar with your website’s layout than Google. So, to ensure your site is crawled properly, act as a website tour guide for Googlebot and divert its attention to content that provides the most value to your SEO efforts.

“This,” according to Javier, “ensures every byte of crawl budget is used on pages that matter.”

Keep in mind though—crawl budget optimization isn't a perfect science. As Barry Adams of Polemic Digital comments, “no one thing can improve crawl efficiency in one easy step. It's about doing all the right things and making small marginal improvements.”

However, when you pair the strategies we've discussed with an advanced website crawler like Sitebulb, you are firmly set on the road to zero crawl waste.

Aishat Abdulfatah writes thought leadership content for B2B SaaS brands that have unique insights to share. She pairs her writing flair with brand experts’ wealth of knowledge to create long-form content that readers love, bookmark, and send to their peers. When she's not writing, she's either reading a novel or catching up on newly released K-dramas. To learn more, connect with her on LinkedIn.
