Get to the bottom of any indexing issue

Sitebulb’s Indexability Check Report will help you untangle even the most complex indexing setups, giving you a clear understanding of anything that is going wrong. Whether it’s an over-zealous robots.txt file, conflicting noindex rules, or misplaced canonical tags, Sitebulb will alert you to any configuration issues.

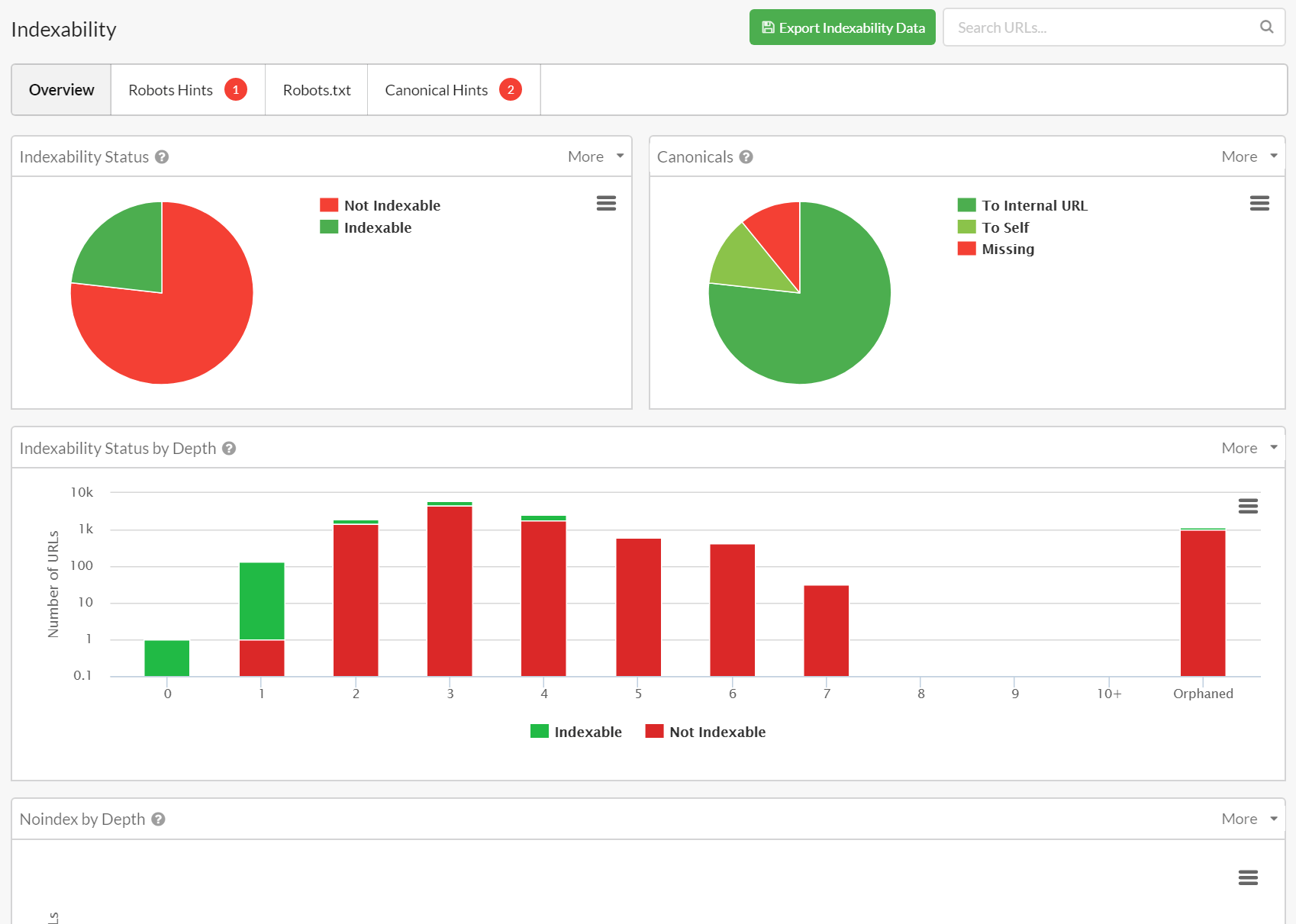

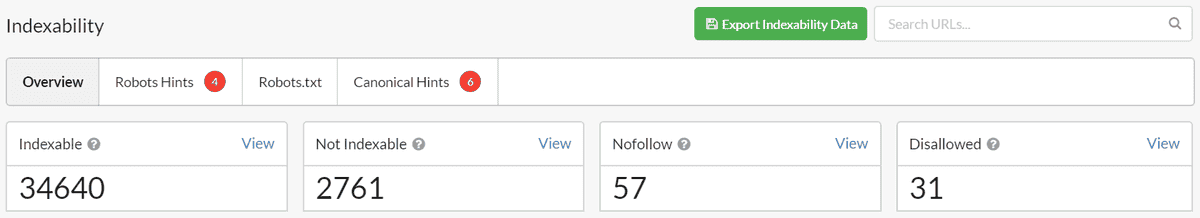

See how robots directives impact indexability

Sitebulb breaks down indexing signals using language you can understand, splitting crawled URLs into two camps: Indexable and Not Indexable. Once you've identified an error or potential problem, you can dig in further to check noindex directives or canonical tag issues.

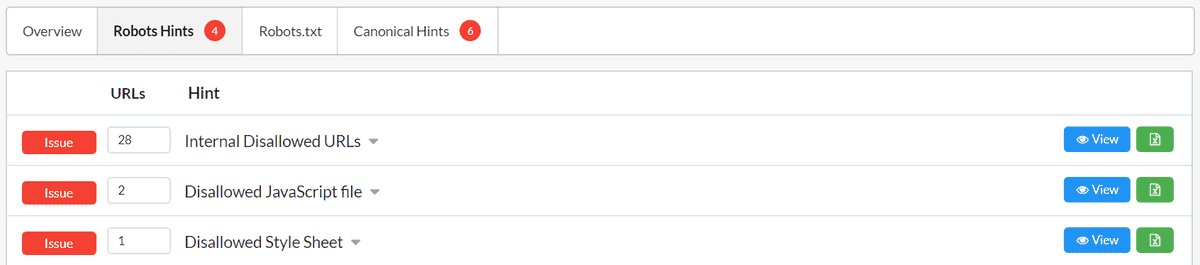

Robots.txt files can cause major crawling and indexing problems, because wayward disallow rules can stop large sections of a website from being crawled at all. Sitebulb lists every URL affected by the robots.txt file, and even picks out the specific robots.txt rule that blocks each one.
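To illustrate what tracing a blocked URL back to a specific rule involves, here is a minimal Python sketch. It is not Sitebulb's implementation. It applies the precedence described in RFC 9309: the longest (most specific) matching pattern wins, and `Allow` beats `Disallow` on a tie. The function names and the `examplebot` user-agent token are hypothetical, and the sketch skips details such as percent-encoding normalization.

```python
import re
from urllib.parse import urlsplit


def parse_groups(robots_txt):
    """Split robots.txt into (user_agents, rules) groups.

    Each rule is a (directive, pattern, line_number) tuple, so a report can
    point back at the exact line that was triggered.
    """
    groups, agents, rules, seen_rule = [], [], [], False
    for lineno, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if seen_rule:  # a user-agent line after rules starts a new group
                groups.append((agents, rules))
                agents, rules, seen_rule = [], [], False
            agents.append(value.lower())
        elif field in ("allow", "disallow") and agents:
            seen_rule = True
            if value:  # an empty "Disallow:" matches nothing
                rules.append((field, value, lineno))
    if agents:
        groups.append((agents, rules))
    return groups


def rule_matches(pattern, path):
    """Match a path pattern, supporting '*' wildcards and a trailing '$'."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    regex = ".*".join(re.escape(part) for part in pattern.split("*"))
    return (re.fullmatch if anchored else re.match)(regex, path) is not None


def find_blocking_rule(robots_txt, url, user_agent):
    """Return the (directive, pattern, line) that blocks url, or None."""
    parts = urlsplit(url)
    path = (parts.path or "/") + (f"?{parts.query}" if parts.query else "")
    groups = parse_groups(robots_txt)
    ua = user_agent.lower()
    # Use the groups that name this crawler; otherwise fall back to "*".
    chosen = [rs for agents, rs in groups if ua in agents]
    if not chosen:
        chosen = [rs for agents, rs in groups if "*" in agents]
    matches = [r for rs in chosen for r in rs if rule_matches(r[1], path)]
    if not matches:
        return None
    # The longest pattern wins; on a tie, allow beats disallow.
    best = max(matches, key=lambda r: (len(r[1]), r[0] == "allow"))
    return best if best[0] == "disallow" else None


if __name__ == "__main__":
    robots_txt = "\n".join([
        "User-agent: *",
        "Disallow: /private/",
        "Allow: /private/public-page",
        "Disallow: /*.pdf$",
    ])
    for url in [
        "https://example.com/private/report",       # blocked by line 2
        "https://example.com/private/public-page",  # allowed by line 3
        "https://example.com/files/guide.pdf",      # blocked by line 4
    ]:
        print(url, "->", find_blocking_rule(robots_txt, url, "examplebot"))
```

Returning the whole rule tuple rather than a yes/no answer is what makes it possible to report the triggering line for every affected URL; Python's built-in `urllib.robotparser.RobotFileParser.can_fetch` only returns a boolean.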

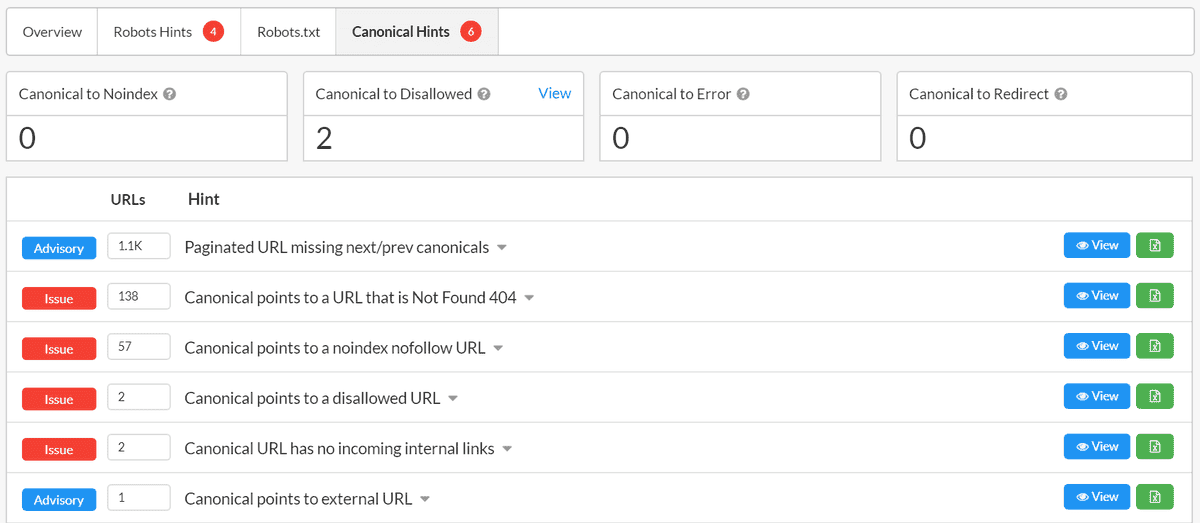

Quickly identify canonical tag issues

Sitebulb lets you quickly review any pages whose canonical tags are not self-referential, so you can double-check that they point to the right URLs.

It also checks for canonical configuration issues, such as duplicate declarations or malformed URLs, and identifies inconsistencies caused by compound robots rules, for example canonical tags that point at noindexed URLs.
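The canonical checks described above can be roughly sketched in Python using only the standard library. This is not how Sitebulb works internally: `check_canonical` and `noindexed_urls` are hypothetical names, the set of noindexed URLs is assumed to come from an earlier crawl, and URLs are compared as plain strings. A real checker would also need to normalize trailing slashes, host case and similar variations.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class CanonicalCollector(HTMLParser):
    """Collect the href of every <link rel="canonical"> on a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel:
            self.hrefs.append((attributes.get("href") or "").strip())


def check_canonical(page_url, html, noindexed_urls):
    """Return a list of canonical issues for one page.

    noindexed_urls is a hypothetical set of URLs that the crawl has already
    found to be noindexed. URLs are compared as plain strings.
    """
    collector = CanonicalCollector()
    collector.feed(html)
    collector.close()
    issues = []
    if len(collector.hrefs) > 1:
        issues.append(f"duplicate canonical declarations ({len(collector.hrefs)})")
    for href in collector.hrefs:
        target = urljoin(page_url, href)  # resolve relative canonicals
        parts = urlsplit(target)
        if not href or parts.scheme not in ("http", "https") or not parts.netloc:
            issues.append(f"malformed canonical URL: {href!r}")
            continue
        if target != page_url:
            issues.append(f"non-self-referential canonical: {target}")
        if target in noindexed_urls:
            issues.append(f"canonical points at a noindexed URL: {target}")
    return issues


if __name__ == "__main__":
    page = "https://example.com/shoes?colour=red"
    html = '<html><head><link rel="canonical" href="/shoes"></head></html>'
    print(check_canonical(page, html, noindexed_urls={"https://example.com/shoes"}))
```

For the sample page, the sketch flags both a non-self-referential canonical and a canonical that points at a noindexed URL. A non-self-referential canonical is not necessarily wrong (parameterized URLs are often canonicalized on purpose), which is why it is reported for review rather than treated as an error.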

Avoid potential problems from duplicate robots declarations

Robots directives can be specified in three different places: the HTML <head>, the HTTP header (via X-Robots-Tag), and the robots.txt file. A single URL can therefore receive multiple directives, and those directives may conflict with one another. Inconsistencies like these are typically very hard to identify manually, and can cause massive problems if left unchecked.

Sitebulb pulls all of these rules together and checks them automatically, picking out each specific issue and every affected URL.
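The idea of pooling robots signals from every location and checking them together can be sketched in Python as follows. This is an illustrative assumption, not Sitebulb's logic. `audit_robots` and its parameters are hypothetical, the robots.txt result is passed in as a simple true/false flag, and user-agent-scoped header values such as `googlebot: noindex` are not handled. The sketch flags any index/noindex or follow/nofollow pair as a conflict, even though search engines resolve such conflicts themselves (Google, for instance, documents that the more restrictive rule applies).

```python
from html.parser import HTMLParser

# "none" and "all" are shorthands for pairs of directives.
EXPANSIONS = {"none": {"noindex", "nofollow"}, "all": {"index", "follow"}}
CONFLICTS = [("index", "noindex"), ("follow", "nofollow")]


class MetaRobotsCollector(HTMLParser):
    """Collect the content of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.values = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            self.values.append(attributes.get("content") or "")


def split_directives(value):
    """Turn a value such as 'noindex, Follow' into {'noindex', 'follow'}."""
    directives = set()
    for token in value.split(","):
        token = token.strip().lower()
        if token:
            directives |= EXPANSIONS.get(token, {token})
    return directives


def audit_robots(html, x_robots_values, blocked_by_robots_txt):
    """Pool robots signals from all three locations and report problems.

    x_robots_values: every X-Robots-Tag header value sent with the page.
    blocked_by_robots_txt: whether a robots.txt rule disallows the URL.
    """
    collector = MetaRobotsCollector()
    collector.feed(html)
    collector.close()
    sources = [("meta robots", split_directives(v)) for v in collector.values]
    sources += [("X-Robots-Tag", split_directives(v)) for v in x_robots_values]

    issues = []
    if len(sources) > 1:
        locations = ", ".join(location for location, _ in sources)
        issues.append(f"multiple robots declarations: {locations}")
    combined = set().union(*(directives for _, directives in sources))
    for a, b in CONFLICTS:
        if a in combined and b in combined:
            issues.append(f"conflicting directives: {a} vs {b}")
    if blocked_by_robots_txt and "noindex" in combined:
        # Crawlers that obey robots.txt never fetch the page,
        # so they cannot see the noindex directive.
        issues.append("noindex is set, but robots.txt blocks crawling the page")
    return issues


if __name__ == "__main__":
    html = '<head><meta name="robots" content="index, follow"></head>'
    print(audit_robots(html, ["noindex"], blocked_by_robots_txt=False))
```

Here the meta tag says `index` while the header says `noindex`, so the sketch reports both the duplicate declaration and the index/noindex conflict. The robots.txt check covers a common compound problem: a page that is both noindexed and disallowed, where the disallow rule prevents crawlers from ever reading the noindex.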